Improve your Twitter bot with MongoDB

Photo by on

In we saw how to create a twitter bot that sends private messages to his followers, containing tweets searched by predefined criteria — certain hashtags selected by us. This time we will improve the reports being sent, by adding additional functionality. We will store the results in a database and filter the newly received tweets according to what we have stored. Thus, we will be able to send only (almost*) unique results.

TL;DR

This is the second part of a showing you how to create your own Twitter bot and host it on . This time we will upgrade our code by using and to produce better results. Also, we will extend the usage of our helpers, by extracting there the composing of the messages content. In the end, we will see how can we use hosting service (free plan) to host our database there.

If you want to skip right to the code, here is the .

Reasoning

What is the reason to do that?

Right now there are plenty of tutorials and articles about creating twitter bots out there, but I could not find any of them that extends the subject to the next level — offering direction for improving the way your bot handles the data.

That is why I decided to introduce a new player in the equation. Something that will help us to do more with our tweet reports — database.

Database

MongoDB is a famous database that has great integration with . It’s quick and easy to use, and it’s perfect for our purpose. We will use it to eliminate the duplicates from our feed, before sending the messages to the followers.

Step by step

If you want to follow through, here are the steps you may take:

- Register a new account in .

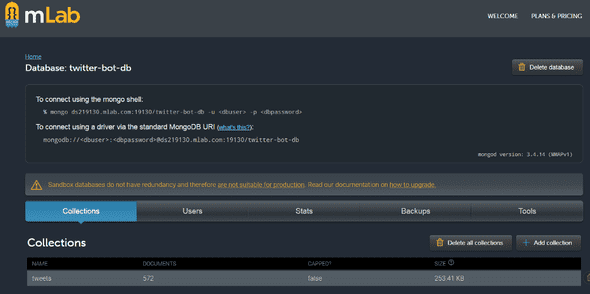

- Add your DBUSER and DBPASSWORD credentials to your .env file (same way as we did in the with the Twitter API keys).

- Create a new directory called “/db” in the root folder of your project and add a new file in it: db.js. Here is where we will put the logic for connecting with the database.

- At the beginning of the file, import — a object modeling tool, designed to work in an asynchronous environment. We will see a bit later how easy is to work with MongoDB when using mongoose and Node.js.

const mongoose = require('mongoose')- Next step is to compose our connection string. We need this, in order to be able to connect and consume our database. Here is how it should look like:

const dbURI = `mongodb://${process.env.DB_USER}:${process.env.DB_PASS}

@ds219130.mlab.com:19130/twitter-bot-db`- The rest of this is just simple logic coming from mongoose, related to setting up different listeners for different events that might occur. One of these events is “connected”, this tells us when we are successfully connected to our database.

- Setup your database model — in the same folder create a new file called tweets.model.js. Here is the logic behind our database model. A simple that serves as a blueprint for our database documents. It contains only four fields. The unique ID parameter called idstr. We will use that when checking for duplicates. The content (status) of the tweet, the author name and the creation date of a tweet — _createdat_. All of the fields are defined as .

const tweetSchema = new Schema({

id_str: {

type: String,

unique: true,

required: true

},

status: {

type: String,

required: true

},

author: {

type: String,

required: true

}

created_at: {

type: String,

required: true

})- The last step, for getting our database up and running, is to create our database controller. This file will contain the main logic for interacting with the database. Here we will put methods for reading and writing records from and into it. Also here, we will implement the functionality for getting only the unique tweets from each feed and record them for post usage. In order to start, create a new file in the “/db” folder, name it tweets.controller.js and paste in it. You probably have seen already, that in this file we have some of the methods exported and some of them defined as local constants. Following the best practices, we will be using only the exported ones in our main flow.

By defining the rest as local, we limit unnecessary exposure to the outer world.

Local methods, used only here:

const fetchTweets = function () { ... }

const recordTweet = function ({ id_str, text, user }) {...}Exported methods, used in :

module.exports.recordUniqueTweets = function (tweets) {...}

module.exports.getUniqueTweets = function (latestTweets) {...}Helpers

In my I mentioned how good is to extract or modularize functionality that can be reused in many other places. We did that with a method for getting the current time. This time we will add another method to , that we will be using for generating the messages content. If, for any reason, we don’t get any new tweets, we will send a message to the followers. Like this one:

`-— Nothing new this time (14.05.2018 14.02).`.Also, we are going to refactor a little bit the method for getting the current time. And then we will be able to use it, when composing the messages content, to add the date of when a tweet is created.

const from = this.getTime(tweet.created_at)Updating main flow

After we finish with the database related tasks, it’s time to amend our and make it start using the new setup. Actually, the change we need to do is really minor. We need to import db.js and tweets.controller.js files from “/db” folder like so:

require('./db/db')

const db = require('./db/tweets.controller')And then, in the callback of the first call that fetches the tweets, replace the forEach loop with the following:

db.getUniqueTweets(data.statuses).then((tweets) => {

const content = helpers.composeContent(tweets)

db.recordUniqueTweets(tweets)

...

})That’s all. 👌

You can find the final version of the code .

Hosting on Mlab

OK, now we are done with the coding and it’s time to publish our updated application and the database it uses. To do so, we are going to use for the database and, again, for the application itself.

If you need help for deploying the application with Heroku, you may take a look at the of this tutorial. Just don’t forget to add the new config variables to “Config Vars” section, under “Settings” tab.

In order to start using your database hosted on Mlab, you first need to login into your account and create a new database, using the control panel provided there.

Once created, just go to its settings and find the connection string that you need to add to your application.

If you have followed the coding part of this tutorial, you will have to add a collection into the database and name it ‘tweets’, in order to stay aligned with the code and get a working application in the end.

Once the database is properly created, the connection string is in place in your code and the application is deployed via Heroku, you are good to go! 🎆 🆒

Conclusion

This article serves two purposes. One is to be like a logical continuation of the first . Second is to show how easily we can use noSQL database, like MongoDB, with Node.js, and services, like Mlab and Heroku, to achieve an improved version of a simple Twitter bot application. It might worth mentioning, that this way we actually are creating our own database with twitter statuses, that later might be used for something else. Maybe some statistical research or material for machine learning, who knows. The best part of this is, that everything is happening automatically.

There will be always options. We just need to think about them.

Note: If you don’t want to use the user interface provided by Mlab, for interaction with the database, you can use other tools, such as .

Of course, any comments or questions are welcome.

🔥 Happy coding! 🔥

You can find my implementation here . If you want to start receiving the same reports as me, follow my bot in Twitter @HerrKlinkerhof3.

I am saying ‘almost’, because, in some cases, you might get tweets with the same content, but posted by different accounts. According to the implemented check these are different as they have different IDs, but still…

Oh… and thank you for reading. 😅